
It is the last week of my technical animation course. I got a good sense of the different areas people are researching nowadays, and it was nice to see everyone come out with results from what they learned. Sometimes the result does not turn out the way you want, but the process is still worth documenting. Also, in computer graphics, comparing results side by side helps the audience visualize them. It's a wrap! I can't wait to see more new papers come out at SIGGRAPH and other conferences!


To keep it fresh, we looked at some of the latest papers published in 2019 at Eurographics and SIGGRAPH. It was nice to see follow-ups on some topics we touched on in previous classes this semester. I have put up the link to the references and papers of SIGGRAPH 2019 below. The Technical Papers preview video is also online now! I will be attending SIGGRAPH this year for the first time, so I am very excited! SIGGRAPH 2019 references: http://kesen.realtimerendering.com/sig2019.html

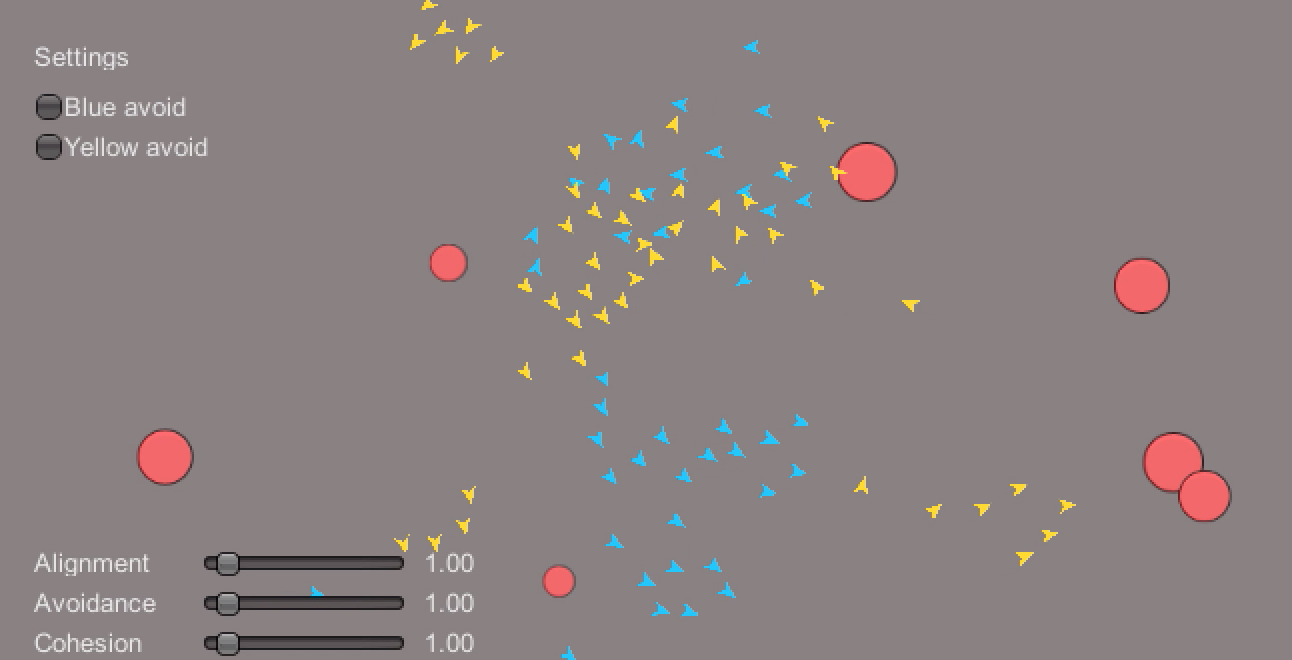

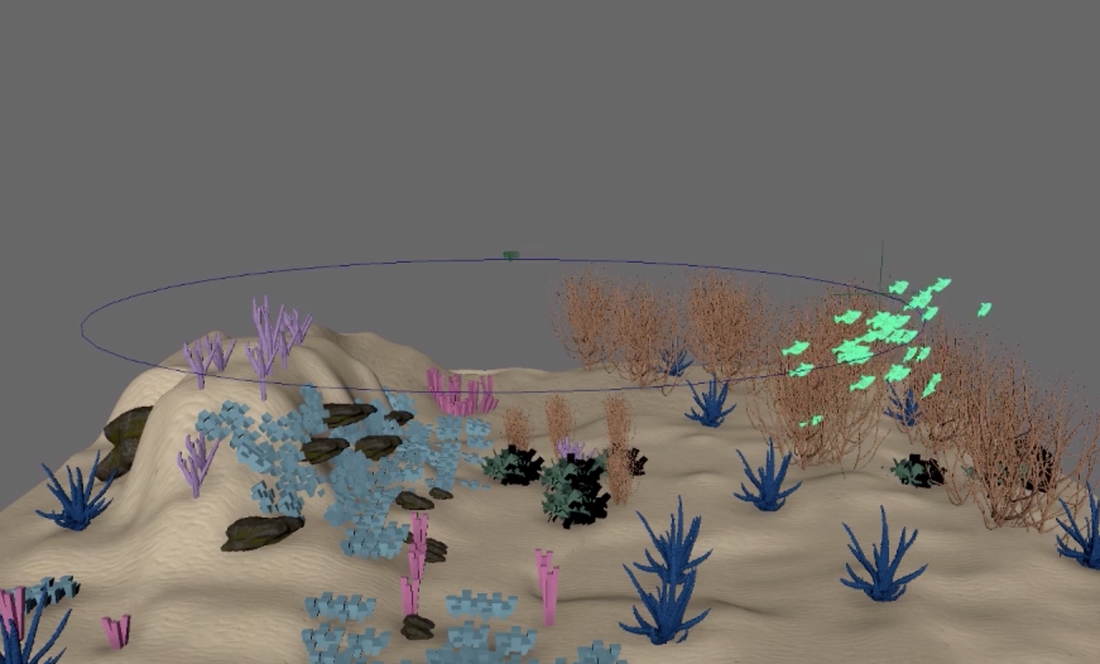

We started with the last group of paper presentations, on how data-driven learning methods are used, and then moved on to crowds. Crowd models start with simple force-based models, and the famous Craig Reynolds' boids simulation was introduced. My final project is also a boids simulation, so I would like to share some of my insights.

The boids algorithm uses three rules to simulate how birds flock in the real world: cohesion, alignment, and separation. Cohesion: to group the boids, compute the average position of each boid's neighbors and steer toward that position. Alignment: to make the boids align, calculate the average velocity of the neighbors and apply it as a force on the boid. Separation: to prevent overcrowding, each boid applies a repulsive force pointing away from each neighbor, scaled by the inverse of the distance. Besides implementing it in Unity3D, I also tried using the Maya MASH plugin to simulate fish swarming. However, it was harder than I thought to get the fish close to the result I wanted, and there is more to consider beyond those three rules.

Unity result with UI

Maya MASH result: fish following the motion path

Boids simulation has since been used in animated films, stock-market data visualization, interactive museum design, and robotics, to simulate how creatures fly or swarm. Crowd simulation is also based on this idea, and besides looking at large-scale results, some research focuses on the detailed structure of individual behaviors. One paper mentions that adding shoulder motion gives a better perception of realism in crowd simulation, and the result looks quite different with and without shoulder movement when people pass each other. It is interesting to see people working on detailed motions within large-scale crowd simulations.
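The three rules above can be sketched in a few lines. This is a minimal 2D sketch in Python, not my Unity implementation; the neighbor radius, the rule weights (`w_coh`, `w_ali`, `w_sep`), and the speed cap are hypothetical values chosen only for illustration. Alignment is written as steering toward the neighbors' average velocity (average minus the boid's own velocity), a common variant of applying the average velocity directly as a force.

```python
import math
from dataclasses import dataclass


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


def neighbors(flock, b, radius):
    """All other boids within `radius` of boid b."""
    return [o for o in flock
            if o is not b and math.hypot(o.x - b.x, o.y - b.y) < radius]


def steer(flock, b, radius=5.0, w_coh=0.01, w_ali=0.05, w_sep=0.5):
    """Combined steering force (fx, fy) on b from cohesion, alignment, separation.
    The radius and weights are illustrative assumptions, not tuned values."""
    nbrs = neighbors(flock, b, radius)
    if not nbrs:
        return 0.0, 0.0
    n = len(nbrs)
    # Cohesion: steer toward the average position of the neighbors.
    coh_x = sum(o.x for o in nbrs) / n - b.x
    coh_y = sum(o.y for o in nbrs) / n - b.y
    # Alignment: steer toward the average velocity of the neighbors.
    ali_x = sum(o.vx for o in nbrs) / n - b.vx
    ali_y = sum(o.vy for o in nbrs) / n - b.vy
    # Separation: push away from each neighbor, scaled by 1 / distance.
    sep_x = sep_y = 0.0
    for o in nbrs:
        dx, dy = b.x - o.x, b.y - o.y
        d = math.hypot(dx, dy)
        if d > 1e-9:
            sep_x += (dx / d) / d  # unit direction away from o, divided by distance
            sep_y += (dy / d) / d
    return (w_coh * coh_x + w_ali * ali_x + w_sep * sep_x,
            w_coh * coh_y + w_ali * ali_y + w_sep * sep_y)


def step(flock, dt=0.1, max_speed=2.0):
    """Advance the flock by one explicit Euler step, capping each boid's speed."""
    forces = [steer(flock, b) for b in flock]  # evaluate all forces before moving anyone
    for b, (fx, fy) in zip(flock, forces):
        b.vx += fx * dt
        b.vy += fy * dt
        speed = math.hypot(b.vx, b.vy)
        if speed > max_speed:
            b.vx *= max_speed / speed
            b.vy *= max_speed / speed
        b.x += b.vx * dt
        b.y += b.vy * dt
```

Computing every force before moving any boid keeps the update independent of list order; updating in place would let boids early in the list affect the rest with already-moved positions.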
In the 21st century, deep learning and machine learning are buzzwords, and almost every area of computing is trying to add this new component. It is interesting to see learning methods used in animation as well. Most of the papers were published in recent years, with different results specifically for character control. The learning bicycle stunts paper is an interesting one. The team presents an approach to simulate and control a human character riding a bike. The rider not only learns to steer and balance in normal riding situations, but also learns to perform a wide variety of stunts. The rider is optimized through offline learning. In the results, the rider is able to balance while doing some difficult stunts; however, it feels a little unrealistic, or too perfect compared with the real world. Learning methods are usually introduced to make a simulation more efficient with fewer iterations of calculation, but for many applications I think it is important to evaluate their usability and availability instead of just following the trend.

Link to the bicycle stunt paper: https://dl.acm.org/citation.cfm?id=2601121&picked=formats

Since I focus more on physics-based simulation, I do not know that much about character control itself. I might not understand some of the technical parts well, but it is nice to see the history and development of this topic. The computational bodybuilding paper, published in 2015, proposes an anatomy-based modeling approach. However, I feel the result is not that realistic, perhaps because of the model they chose, or because they went for extreme body types with amounts of fat or muscle that a normal human would not have. We then moved on to how to control the character, and it was my first time learning about locomotion. A locomotion system is about making walking and running look better and more believable without requiring lots of animations. It normally blends motion-captured walking or running cycles with keyframed ones and then adjusts the leg bones so that the feet step accurately on the ground. Unity has its own locomotion system, and I would like to look into it and research it further.

This week we started with a presentation session. One paper caught my interest: "Toward Wave-based Sound Synthesis for Computer Animation". The goal is an approach to generate sound for physics-based simulation. They seek to resolve animation-driven sound radiation with near-field scattering and diffraction effects. The results in their video were pretty successful, and I think this will be really useful for sound designers in the future, since they will get better sound output. Later in the week, we learned about facial animation and the idea of blend shapes. I had also seen one of Facebook's results at the Facebook Reality Labs in Pittsburgh, where they are researching deep appearance models for face rendering.
The result is quite appealing, and it will be a huge step for the VR world as well. When I was at the lab, one of the researchers mentioned that one difficulty is hair rendering, due to the complexity of hair strands. That is why their demo video does not show any hair on the avatars.

We learned about deformables this week. I had not touched on this topic before, so it is quite new to me. I would like to share more of my thoughts on the paper "Projective Dynamics: Fusing Constraint Projections for Fast Simulation". I was intrigued by the last video with the jelly-like house, bending grass, and trees. They propose a new "projection-based" implicit Euler integrator that supports a large variety of geometric constraints in a single physical simulation framework. Compared with other solvers, theirs seems to be considerably faster than the Newton-based solver while giving similar result quality. With only ten iterations, the result is already good enough. This makes it a good approach for real-time applications, where speed matters more than high accuracy. Another paper was about deformables with exact energy conservation. Exact energy conservation means the animation will not explode and will not suffer from numerical damping, which commonly happens with numerical integration schemes. We also looked at other classmates' results. A lot of people presented cloth simulations, and it is interesting to see how much changing the timestep affects the result. Some people presented results with different integrators. So far, I think the Verlet integrator does the job!
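To see why the timestep and the integrator matter so much, here is a minimal sketch on a single undamped spring (a 1D harmonic oscillator) rather than on anyone's actual cloth code. The stiffness `k`, mass `m`, and timesteps are assumptions for illustration. For this system, one explicit Euler step multiplies the total energy by exactly (1 + (k/m)·dt²), so the energy grows every step (the "explosion"), while position Verlet keeps it bounded.

```python
def accel(x, k=100.0, m=1.0):
    """Acceleration of a spring anchored at the origin (Hooke's law)."""
    return -k / m * x


def energy(x, v, k=100.0, m=1.0):
    """Kinetic plus spring potential energy."""
    return 0.5 * m * v * v + 0.5 * k * x * x


def explicit_euler(x, v, dt, steps):
    for _ in range(steps):
        # Both updates use the old state; this is what makes the energy grow.
        x, v = x + v * dt, v + accel(x) * dt
    return x, v


def position_verlet(x, v, dt, steps):
    # Bootstrap the "previous" position with a backward Taylor step.
    x_prev = x - v * dt + 0.5 * accel(x) * dt * dt
    for _ in range(steps):
        x_next = 2.0 * x - x_prev + accel(x) * dt * dt
        x_prev, x = x, x_next
    # Verlet stores no velocity; estimate it with a backward difference.
    return x, (x - x_prev) / dt


if __name__ == "__main__":
    x0, v0 = 1.0, 0.0  # stretched by 1 and at rest: initial energy is 50
    for dt in (0.01, 0.001):
        steps = int(round(10.0 / dt))  # 10 simulated seconds
        print(dt,
              energy(*explicit_euler(x0, v0, dt, steps)),
              energy(*position_verlet(x0, v0, dt, steps)))
```

With dt = 0.01, the Euler energy grows by 1.01^1000 (more than 20,000x) over 10 simulated seconds; shrinking dt to 0.001 cuts the growth to about 1.0001^10000 ≈ 2.7x, while Verlet stays close to the initial 50 in both cases. It is the same timestep sensitivity seen in the cloth results, in miniature.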

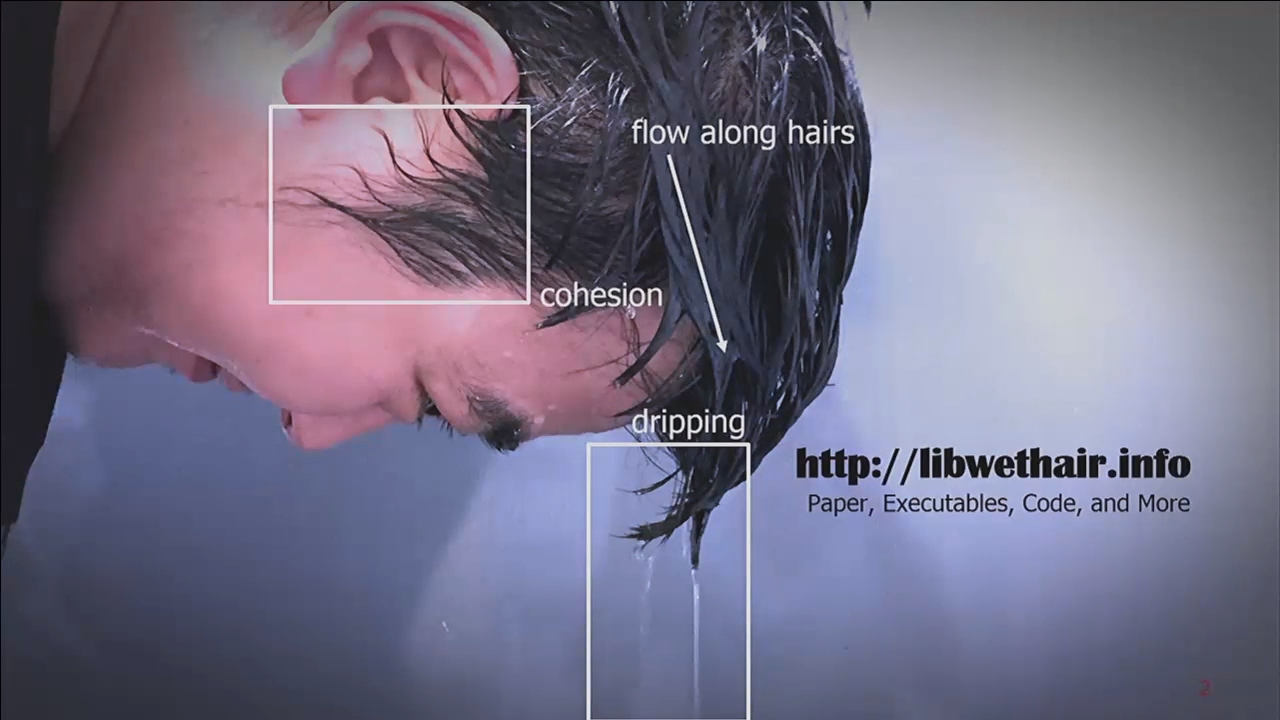

This week I presented the paper "A multi-scale model for simulating liquid-hair interactions" from SIGGRAPH 2017, so I will discuss it in more detail here. The main author is Yun (Raymond) Fei, who also published a paper about liquid-fabric interactions at SIGGRAPH 2018. The main goal of this paper is to replicate how hair realistically interacts with water, including how water flows along each hair strand. In the animation industry, it is still very hard to simulate hair and water together in such detail. We can see many examples in animation, like Wreck-It Ralph 2, where the Little Mermaid's hair and the water are created completely separately and combined at the compositing stage, so there is no real interaction between them beyond a texture change. (See the image below.) Therefore, Raymond's team set out to research three main characteristics of hair and water interaction:

In his video, he talks more about the exact math behind these three parts, and you can learn more there. Some more thoughts about this paper:

Overall, I think this paper shows positive results for liquid interactions focusing on different components, and if developed further, it will help artists create realistic and more natural interactions. However, one of my doubts is that it seems to focus too much on the hair-tip details, and the whole hair simulation looks very puffy, whereas I would expect wet hair to stick to the head more.

The animated short I am working on this semester is about water simulation, so I was interested to learn about the history of fluid simulation. It is interesting to see how fluid simulation has developed over the years. Fluid simulation can also be used for smoke or snow with different fluid controls. There are mainly two types of approaches: particle-based and grid-based. We first talked about methods like MP and LS, then moved to PIC, FLIP, and APIC, which are hybrid methods combining particles and grids, and lastly we talked about SPH. Below I want to show the results of different techniques in fluid simulation. (From the paper "The affine particle-in-cell method") Personally, I think FLIP suffers from more noise, while APIC seems smoother, gives properly realistic fluid behavior, and preserves the shape better as well.
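The difference between PIC and FLIP comes from how the grid velocity is transferred back to the particles. Here is a minimal 1D sketch, assuming linear (tent) interpolation weights, a uniform grid spacing `dx`, and a placeholder grid update that only adds a body force (no pressure projection); it is not code from the APIC paper. PIC overwrites each particle's velocity with the interpolated new grid velocity, while FLIP only adds the interpolated change in grid velocity, and the hypothetical `flip_ratio` parameter blends the two.

```python
import math


def _cell(x, dx):
    """Index of the grid node left of x and the fractional offset in [0, 1)."""
    i = math.floor(x / dx)
    return i, x / dx - i


def particles_to_grid(xs, vs, n_nodes, dx):
    """Scatter particle velocities to nodes as a weight-normalized average.
    Assumes every particle lies inside [0, (n_nodes - 1) * dx)."""
    mom = [0.0] * n_nodes
    wsum = [0.0] * n_nodes
    for x, v in zip(xs, vs):
        i, f = _cell(x, dx)
        for node, w in ((i, 1.0 - f), (i + 1, f)):
            mom[node] += w * v
            wsum[node] += w
    return [m / w if w > 0.0 else 0.0 for m, w in zip(mom, wsum)]


def grid_to_particles(xs, grid, dx):
    """Gather grid values back to the particles with the same tent weights."""
    out = []
    for x in xs:
        i, f = _cell(x, dx)
        out.append((1.0 - f) * grid[i] + f * grid[i + 1])
    return out


def pic_flip_step(xs, vs, n_nodes, dx, accel, dt, flip_ratio):
    """One velocity round trip; flip_ratio=0 is pure PIC, 1 is pure FLIP."""
    g_old = particles_to_grid(xs, vs, n_nodes, dx)
    g_new = [g + accel * dt for g in g_old]  # placeholder grid update: body force only
    v_pic = grid_to_particles(xs, g_new, dx)
    dv = grid_to_particles(xs, [a - b for a, b in zip(g_new, g_old)], dx)
    v_flip = [v + d for v, d in zip(vs, dv)]
    return [flip_ratio * fl + (1.0 - flip_ratio) * pc
            for fl, pc in zip(v_flip, v_pic)]
```

Starting from alternating particle velocities such as [1, -1, 1, -1, ...] with no body force, pure FLIP returns them unchanged, while pure PIC averages neighbors together and loses most of that variation. That is the numerical dissipation of PIC and, read the other way, the reason FLIP keeps (and can amplify) particle-level noise; solvers often blend the two with a mostly-FLIP ratio.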

This week we learned how to extend a particle system into a spring/mass cloth simulation. It was interesting to learn about the different integrators like Euler, RK4, and Verlet, and how Maya designs its dynamics systems such as nCloth, since I am doing hair simulation with Maya's nHair system. There are different simulation engines on the market, and I wish to explore them in the future. We also looked through some recent papers. One that caught my eye is "Interactive Design of Periodic Yarn-Level Cloth Patterns", published at SIGGRAPH 2018. I had not seen any cloth research specifically focused on yarn patterns and what thicker cloth looks like. Most cloth that gets created and represented is a very smooth, thin layer, so it is great to see people researching this area. Below is the video of this paper.

This week there were more sharing sessions on recently published papers. The first thing I felt after the presentations is that on the research side of animation, there are so many different fields, with people working on polishing each one. I am amazed by how detailed this industry can be.
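Extending a particle system into spring/mass cloth mostly means connecting the particles with springs and summing Hooke's-law forces. Below is a minimal 2D sketch (a vertical net hanging from its two top corners), not Maya's nCloth; the grid size, stiffness `k`, per-step `damping` factor, and the choice of structural plus shear springs (no bending springs) are all assumptions. It integrates with position Verlet, one of the integrators discussed in class.

```python
import math


def make_grid(w, h, spacing):
    """Particle positions; node (i, j) has index j * w + i, row j hangs below row j - 1."""
    return [[i * spacing, -j * spacing] for j in range(h) for i in range(w)]


def build_springs(w, h, spacing):
    """(a, b, rest_length) for structural (grid edges) and shear (diagonal) springs."""
    idx = lambda i, j: j * w + i
    diag = spacing * math.sqrt(2.0)
    springs = []
    for j in range(h):
        for i in range(w):
            if i + 1 < w:
                springs.append((idx(i, j), idx(i + 1, j), spacing))   # structural
            if j + 1 < h:
                springs.append((idx(i, j), idx(i, j + 1), spacing))   # structural
            if i + 1 < w and j + 1 < h:
                springs.append((idx(i, j), idx(i + 1, j + 1), diag))  # shear
                springs.append((idx(i + 1, j), idx(i, j + 1), diag))  # shear
    return springs


def compute_forces(pos, springs, k, mass, gravity):
    """Gravity plus Hooke's-law spring forces on every particle."""
    forces = [[0.0, -mass * gravity] for _ in pos]
    for a, b, rest in springs:
        dx = pos[b][0] - pos[a][0]
        dy = pos[b][1] - pos[a][1]
        length = math.hypot(dx, dy)
        if length == 0.0:
            continue
        f = k * (length - rest)  # > 0 when stretched: pulls a toward b
        fx, fy = f * dx / length, f * dy / length
        forces[a][0] += fx
        forces[a][1] += fy
        forces[b][0] -= fx
        forces[b][1] -= fy
    return forces


def verlet_step(pos, prev, forces, mass, dt, pinned, damping=0.99):
    """Position Verlet with a simple velocity damping factor; pinned particles stay put."""
    new = []
    for n, (p, q, f) in enumerate(zip(pos, prev, forces)):
        if n in pinned:
            new.append(list(p))
        else:
            new.append([p[d] + damping * (p[d] - q[d]) + f[d] / mass * dt * dt
                        for d in range(2)])
    return new, pos  # (current, previous) for the next step


if __name__ == "__main__":
    w, h = 3, 3
    pos = make_grid(w, h, 1.0)
    prev = [list(p) for p in pos]  # start at rest: previous == current
    springs = build_springs(w, h, 1.0)
    for _ in range(2000):
        f = compute_forces(pos, springs, k=500.0, mass=1.0, gravity=9.8)
        pos, prev = verlet_step(pos, prev, f, mass=1.0, dt=0.01, pinned={0, w - 1})
    print(pos[w * (h // 2) + w // 2])  # center particle after settling
```

The shear springs keep each square from collapsing into a rhombus; a fuller cloth model usually also adds bending springs that skip one particle.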

Three papers were presented in class: "A new example-based approach for synthesizing hand-colored cartoon animations", "An empirical rig for jaw animation", and "Language-driven synthesis of 3D scenes from scene databases". All of these are very useful for the animation industry. The one I am most interested in is the set-creation one presented by Iris. I think if developed further, the language-driven scene setup could be helpful for prototyping environments.

This week, besides learning more about IK, we saw more papers published about rigging and skinning. One paper that caught my attention is MIT's automatic rigging system called Pinocchio. In my experience, rigging in Maya is sometimes troublesome when doing weight painting, since the paint brush is hard to control and it takes time to try and test. If the Pinocchio system were further developed and more accurate, I think it would save a lot of time in the rigging stage. Since I have not tried the system yet and the paper is relatively old, I do not know whether its limitations have been fixed. It seems that even with automatic rigging, the auto-rig might not handle each character's different clothing well. Therefore, having parameters that riggers can adjust and play around with could be important.

This week we were introduced to different methods of inverse kinematics (IK) and the math for computing it. One thing mentioned in class is the difference between inverse kinematics (IK) and forward kinematics (FK). I have done animation in Maya before but never tried IK controls, so after understanding the math behind it, I did some more research on how IK and FK are actually used when animating in software.
Apparently, with FK you rotate a joint and all of its child joints move along with it, which works better for animating hands and arms. With IK, you move the end of the chain directly and the joint rotations are solved for you, so the end can follow a straight-line trajectory, which is sometimes better for showing a more natural interaction. For leg animation, IK is recommended. There may also be times when IK and FK are switched at different frames of the same animation sequence. This is the video I found, and it was interesting to learn about IK and FK from an animator's perspective.
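The FK/IK difference is easiest to see on a two-bone chain (upper arm and forearm, or thigh and shin). Here is a minimal 2D sketch, assuming planar joints and hypothetical bone lengths `l1` and `l2`: FK takes joint angles and returns the end position, while this analytic IK (law of cosines, only one of several IK methods) takes a target position and solves for the angles. It is not how Maya's IK handles are implemented internally.

```python
import math


def fk(l1, l2, theta1, theta2):
    """Forward kinematics: joint angles (radians) -> end-effector position.
    theta1 is measured from the x-axis, theta2 relative to the first bone."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def two_bone_ik(l1, l2, tx, ty, eps=1e-9):
    """Analytic inverse kinematics: target (tx, ty) -> joint angles.
    Unreachable targets are clamped, so the chain stretches toward them.
    Returns one of the two mirror-image elbow solutions (theta2 >= 0)."""
    d = math.hypot(tx, ty)
    d = min(max(d, abs(l1 - l2) + eps), l1 + l2 - eps)
    # Law of cosines gives the elbow angle from the three side lengths.
    cos_t2 = (d * d - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    theta2 = math.acos(max(-1.0, min(1.0, cos_t2)))
    # Aim at the target, then back off by the angle the bent elbow introduces.
    theta1 = math.atan2(ty, tx) - math.atan2(l2 * math.sin(theta2),
                                              l1 + l2 * math.cos(theta2))
    return theta1, theta2
```

Animating with IK then amounts to calling `two_bone_ik` every frame for the hand or foot target and feeding the angles to `fk` (or to the rig), which is why a foot can stay planted on the ground while the hip moves.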

This week we went to the motion capture lab, and Justin showed us how the markers work with the cameras. It was very cool to see in person how motion capture works and how it shows up in the software. I had only seen some behind-the-scenes videos of motion capture for movies, and I did not know this area still has so many obstacles: markers can easily fall off if a movement is too big, and occlusion issues require a lot of post-processing. I was also amazed that tracking a human face requires 120-150 markers. Moreover, tracking the full body and tracking only the face require different camera setups. Motion capture seems to be used a lot in research on human anatomy and on simulating human gestures, which is further applied in robotics and biotech. Here is a recent video I watched about motion capture in the gaming industry. I also found this interesting video about the evolution of motion capture. There have obviously been a lot of improvements, and the tracking is more accurate. However, I am curious about the future prospects for riggers and animators in the industry.

This is the first week, and we mainly talked about how different animation techniques are used in industry and research. Personally, I have experience animating in Maya. I found that keyframing a human requires a lot of knowledge of human anatomy and of how humans perform different behaviors. Moreover, after adding the rig (skeleton) to the model, painting the weights of each joint is hard and sometimes takes a lot of time. I am interested to see that there are different techniques, like motion capture, to achieve the same result. Specifically, I think physics-based animation will play an important role in the future and reduce a lot of work on the artist side. With proper simulation of fluid, cloth, hair, etc., there is more potential for the animation industry.
A lot of animation work has been combined with recent hot topics like machine learning and AI. Cascadeur is a unique physics-based animation software I saw online that gives animators the ability to create realistic action sequences of any complexity. This might change how animators work in the future.

This site mainly shares what I learned and found interesting in my Technical Animation class.